In recent posts I mentioned that I am working on a fully autonomous mode using an on-board camera. Here is a video of my first test:

Fully Autonomous Hover Flight [Video Link]

The controller is not used at any point in the video. The flie should hold the position it is released at, even if you move it or blow it away.

I've also had it follow some waypoints :)

I'll document everything in my master's thesis in a few months, but if you have questions now, please ask here!

The SLAM system runs in a single thread at 20-30 Hz and:

- detects and ignores bad images (red frames = bad ones),
- deinterlaces the images (removes scan lines),
- uses the IMU to speed up and simplify computations (for pose estimation and to pre-rotate descriptors for matching),
- uses OpenCV to detect features (green circles) and to match features between frames and keyframes (green lines),
- estimates a pose from the inlier matches (via RANSAC, purple lines).
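The post doesn't say how the deinterlacing or the bad-image test are done, so here is a minimal sketch of one common approach, assuming grayscale frames as NumPy arrays from an interlaced analog camera. `deinterlace` keeps one field and line-doubles it; `is_bad_frame` is a hypothetical heuristic (the thresholds `min_std` and `max_comb` are made up) that rejects nearly flat frames and frames with strong "comb" scan-line artefacts.

```python
import numpy as np

def deinterlace(frame: np.ndarray) -> np.ndarray:
    """Keep only the even field and line-double it back to full height.

    The two interlaced fields are captured at different times, so when the
    camera moves the odd and even rows disagree ("scan lines"). Dropping
    one field removes that, at the cost of vertical resolution.
    """
    even_field = frame[0::2]                    # rows 0, 2, 4, ...
    doubled = np.repeat(even_field, 2, axis=0)  # each row appears twice
    return doubled[: frame.shape[0]]            # trim if the height is odd

def comb_score(frame: np.ndarray) -> float:
    """Ratio of adjacent-row difference to same-field (2-row) difference.

    Adjacent rows come from different fields; rows two apart come from the
    same field. A large ratio means the fields disagree: combing.
    """
    f = frame.astype(np.float64)
    adjacent = np.abs(f[1:] - f[:-1]).mean()
    same_field = np.abs(f[2:] - f[:-2]).mean()
    return adjacent / (same_field + 1e-9)

def is_bad_frame(frame: np.ndarray, min_std: float = 5.0,
                 max_comb: float = 1.5) -> bool:
    """Hypothetical bad-frame test (thresholds are assumptions)."""
    if frame.std() < min_std:       # nearly uniform: dropout or dark frame
        return True
    return comb_score(frame) > max_comb
```

In a real pipeline the thresholds would need tuning on recorded footage; a smooth image has a comb score well below 1, since rows two apart usually differ more than neighbouring rows.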
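How exactly the IMU simplifies pose estimation isn't spelled out. One common trick, sketched here as an assumption, is to take the inter-frame rotation from the gyro so RANSAC only has to estimate translation. A hypothesis then needs a single match instead of two or more. This is a deliberately simplified 2D image-plane model (yaw about the optical axis plus a pixel translation, points measured relative to the image centre), and the names `ransac_translation` and `imu_yaw` are hypothetical; it is not the author's full pose estimator.

```python
import numpy as np

def rotate_points(points: np.ndarray, yaw: float) -> np.ndarray:
    """Rotate N x 2 points (relative to the image centre) by yaw radians."""
    c, s = np.cos(yaw), np.sin(yaw)
    R = np.array([[c, -s], [s, c]])
    return points @ R.T

def ransac_translation(prev_pts, curr_pts, imu_yaw, iters=100,
                       thresh=2.0, seed=0):
    """Estimate the translation between matched keypoints with RANSAC.

    Rotation comes from the IMU, so each hypothesis uses ONE match:
    t = q - R p. Returns (t, inlier_mask).
    """
    rng = np.random.default_rng(seed)
    rotated = rotate_points(prev_pts, imu_yaw)
    best_mask = np.zeros(len(prev_pts), dtype=bool)
    for _ in range(iters):
        i = rng.integers(len(prev_pts))          # minimal sample: 1 match
        t = curr_pts[i] - rotated[i]
        residuals = np.linalg.norm(curr_pts - (rotated + t), axis=1)
        mask = residuals < thresh
        if mask.sum() > best_mask.sum():         # keep the largest consensus set
            best_mask = mask
    # Least-squares refit on all inliers: the mean per-match translation.
    t = (curr_pts[best_mask] - rotated[best_mask]).mean(axis=0)
    return t, best_mask
```

The smaller minimal sample is what makes this cheaper: with the usual iteration bound N = log(1 - p) / log(1 - w^s), 50% inliers and 99% confidence need about 7 iterations for a 1-match model versus about 17 for a 2-match model.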

Here is a picture of the initialisation:

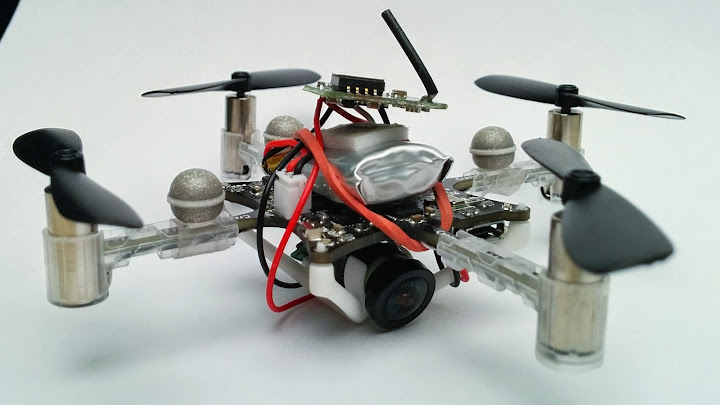

One of the flie and the camera system used for the experiment:

And a screenshot from the VO (visual odometry) system:

Cheers